Damien Martins Gomes

AI Scientist @ Thales

About

As an AI Scientist at Thales Research & Technology, I work at the intersection of deep learning and signal processing, designing intelligent audio systems under real-world constraints. My research spans speech enhancement, embedded and real-time models, and generative audio systems where efficiency, robustness, and reliability are critical.

I recently completed a research master's degree in Computer Science at Concordia University, alongside a dual degree in Aerospace Engineering with a specialization in Telecommunications at IPSA Toulouse. This interdisciplinary background allows me to bridge physical systems, signal processing, and modern machine learning to tackle complex, high-impact problems.

Previously at Mila – Quebec AI Institute, I developed AdaFisher, a second-order optimization algorithm presented in a paper accepted at ICLR 2025. Driven by curiosity and a strong research mindset, I enjoy turning raw signals into intelligent systems and exploring how AI can robustly perceive and understand the world, one waveform at a time.

Education

Concordia University

MCompSc: Master of Research in Optimization and Machine Learning

September 2023 — April 2025

CGPA: 4.1/4.3

Thesis awarded with "Outstanding" distinction

Relevant Coursework

- Representation Learning (MILA Institute)

- Geometric Data Analysis (MILA Institute)

- Algorithm Design Techniques

- Parallel Programming

IPSA Toulouse, France

MSc in Aerospace Engineering: Embedded Systems and Signal Processing

September 2019 — April 2025

CGPA: 3.8/4.0

Obtained with "Outstanding" distinction

Relevant Coursework

- Non-Linear Optimization, Numerical Linear Algebra

- Distributed Intelligent Systems, Guided Propagation

- Advanced FPGA Circuits, On-board Networks

- Real-Time Information Systems

Experience

Thales

AI Scientist

May 2025 — Now

- Designed end-to-end audio AI systems, from research and model development to integration support on edge and embedded devices

- Developed lightweight, real-time speech enhancement models under strict latency, memory, and compute constraints for embedded deployment

- Conducted research on generative audio models, including flow matching and audio foundation models, and adapted them to multiple downstream tasks

- Investigated hallucination phenomena in generative audio models, focusing on their quantification and mitigation

- Worked with diverse model architectures such as Transformers and Mamba, contributing to three industrial patents

MILA — Quebec AI Institute

AI/ML Research Student

September 2023 — April 2025

- Developed AdaFisher, a novel second-order optimizer that significantly improves performance over Adam in image classification and language modeling

- Designed and ran large-scale training experiments on vision models and LLMs (GPT-1), optimizing hyperparameters, fine-tuning architectures, and scaling distributed training

- Led theoretical research on neural network optimization, curvature-aware updates, matrix-vector products (MVPs), and second-order methods

- Served as a reviewer for top-tier conferences: ECCV 2024, NeurIPS 2024, CVPR 2025, ICML 2025

- Supervised by Dr. Eugene Belilovsky, Dr. Guy Wolf, and Dr. Mahdi S. Hosseini

Yale University × MILA

AI/ML Research Collaborator

September 2024 — January 2025

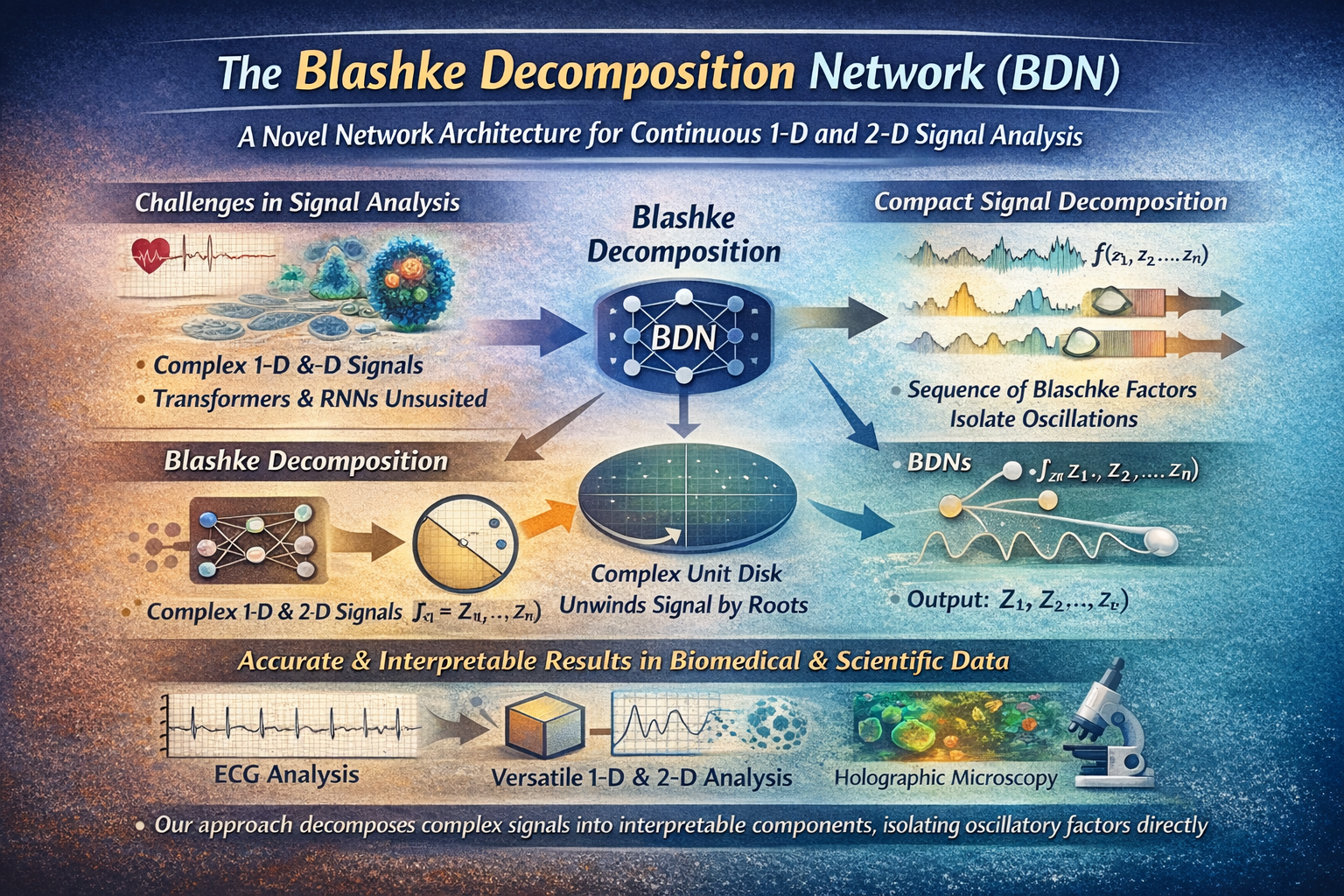

- Led research on developing novel neural network architectures based on an innovative mathematical framework

- Implemented and optimized these architectures within LLMs to improve performance and computational efficiency

- Developed methods to capture complex oscillatory patterns in NLP and speech processing tasks

- Collaborated with Dr. Yanlei Zhang and Yale University research team

Murmuration SAS

Machine Learning Engineer

June 2023 — August 2023

- Contributed to the European research project "DeepCube" developing a sophisticated price engine model

- Implemented constrained optimization algorithms using SciPy and reinforcement learning models based on DQN

- Integrated a Generative Adversarial Network (GAN) for synthetic data generation

EuroMoonMars

Commander / Analog Astronaut

June 2021 — September 2021

- Commanded the EMMPOL7 mission simulating lunar base conditions, organized by EMM, ILEWG, and AATC

- Conducted reinforcement learning experiments on rover navigation in challenging terrain

- Designed a payload for a lunar launcher in CATIA, intended for 3D printing

Research

AdaFisher: Adaptive Second-Order Optimization via Fisher Information

We propose AdaFisher, a novel second-order optimizer that integrates Fisher information into the Adam family. AdaFisher achieves consistently faster convergence and improved generalization compared to Adam, AdamW, AdaHessian, and Shampoo across image classification and language modeling tasks, while remaining computationally efficient.

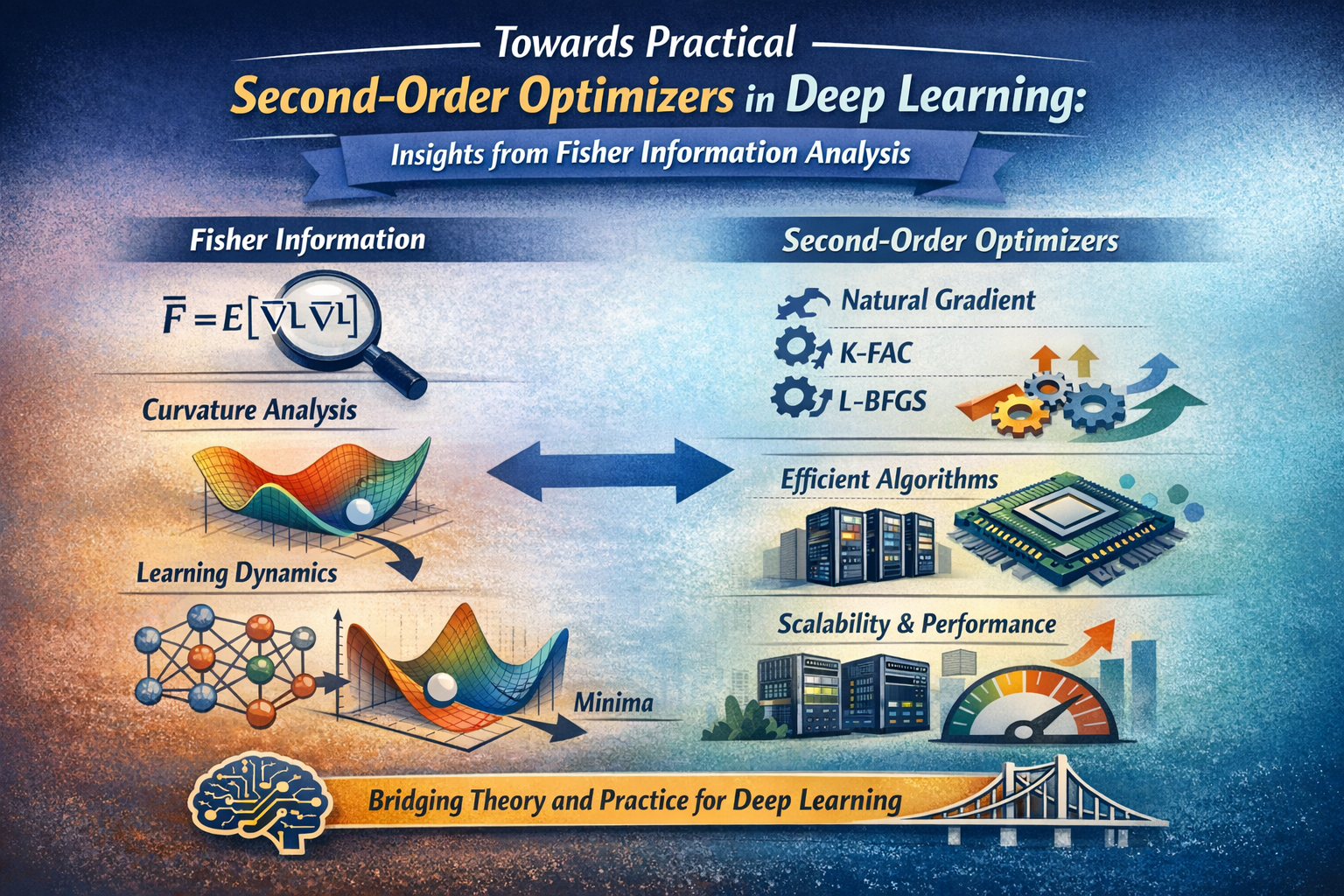

Towards Practical Second-Order Optimizers in Deep Learning: Insights from Fisher Information

This thesis investigates the dynamics of deep learning optimization and the trade-offs between convergence speed, stability, and computational efficiency. It provides a unified analysis of zeroth-, first-, and second-order optimization methods, with a particular focus on curvature-aware approaches. The work culminates in a detailed theoretical and empirical study of AdaFisher, the optimizer introduced in my ICLR 2025 paper.

BDN: Blaschke Decomposition Networks

We introduce Blaschke Decomposition Networks (BDNs), a novel neural architecture for learning from continuous real-valued and complex-valued 1-D and 2-D signals, data modalities that are poorly matched to conventional transformer, convolutional, or recurrent networks. BDNs leverage the Blaschke decomposition to iteratively “unwind” a signal into interpretable oscillatory components defined by its roots in the complex unit disk. The network is trained to predict these roots directly, yielding compact, interpretable representations. We extend the framework from 1-D signals to 2-D data via a wedge-based factorization. Experiments on biomedical sensor data, including electrocardiograms and phase holographic microscopy, demonstrate strong predictive performance with significantly fewer parameters than existing architectures.

Honors & Awards

Fonds de recherche du Québec (FRQNT) Master's (B1X) Scholarship

2024 — 2025

Concordia University Split Merit Scholarship

2023 — 2024

Ivan Velan Student Award

2023 · Outstanding athletic performance

Contact

Location

Thales Research & Technology

1 Avenue Augustin Fresnel

Palaiseau, 91120, France